Micron to Capture 10-15% HBM Market in 2024/2025, SK Hynix Sees 60%+ Surge in Demand

SK Hynix, Samsung, Micron

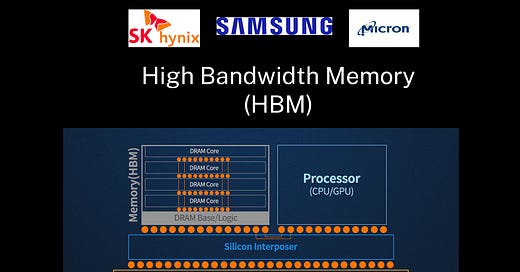

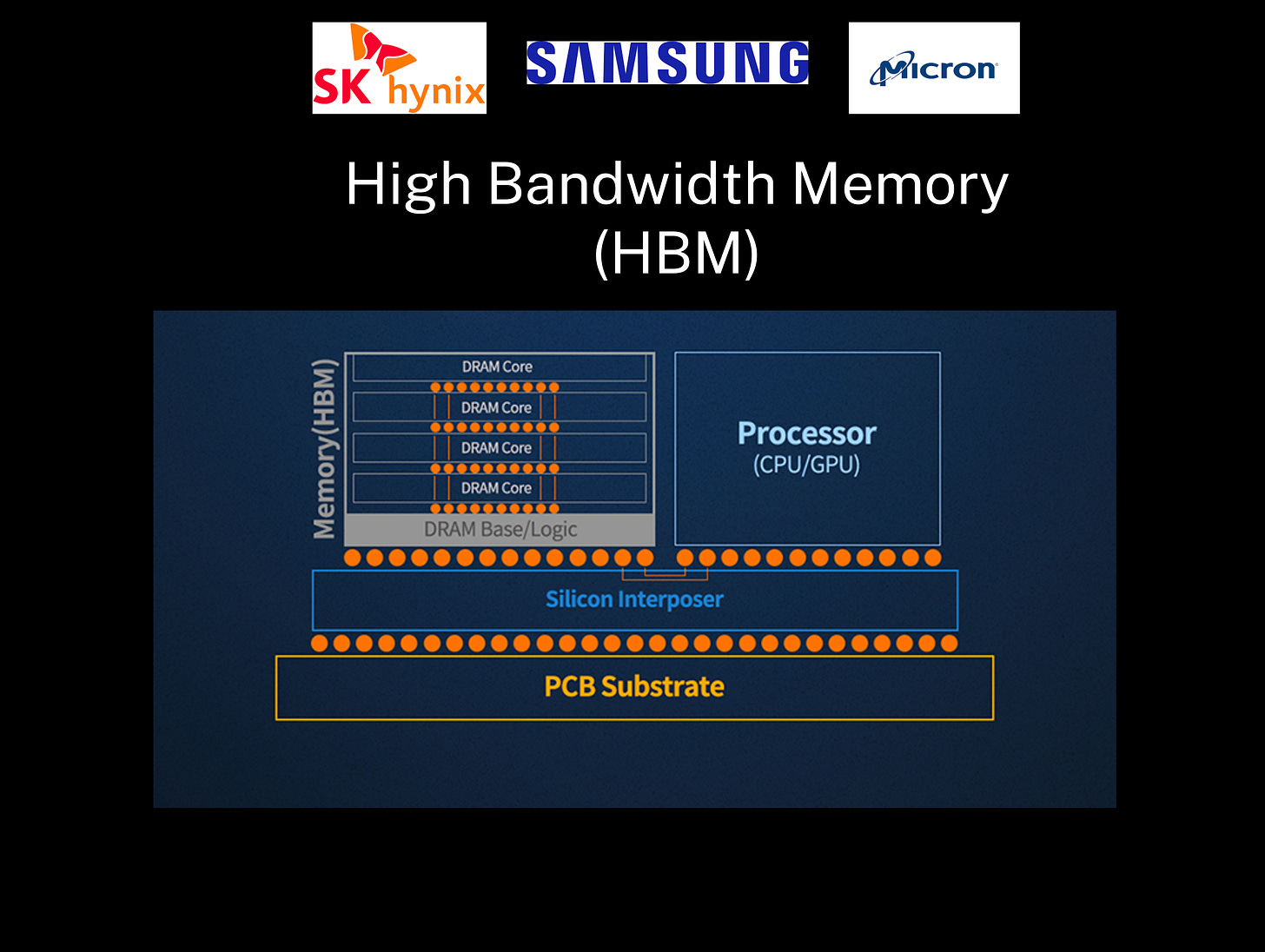

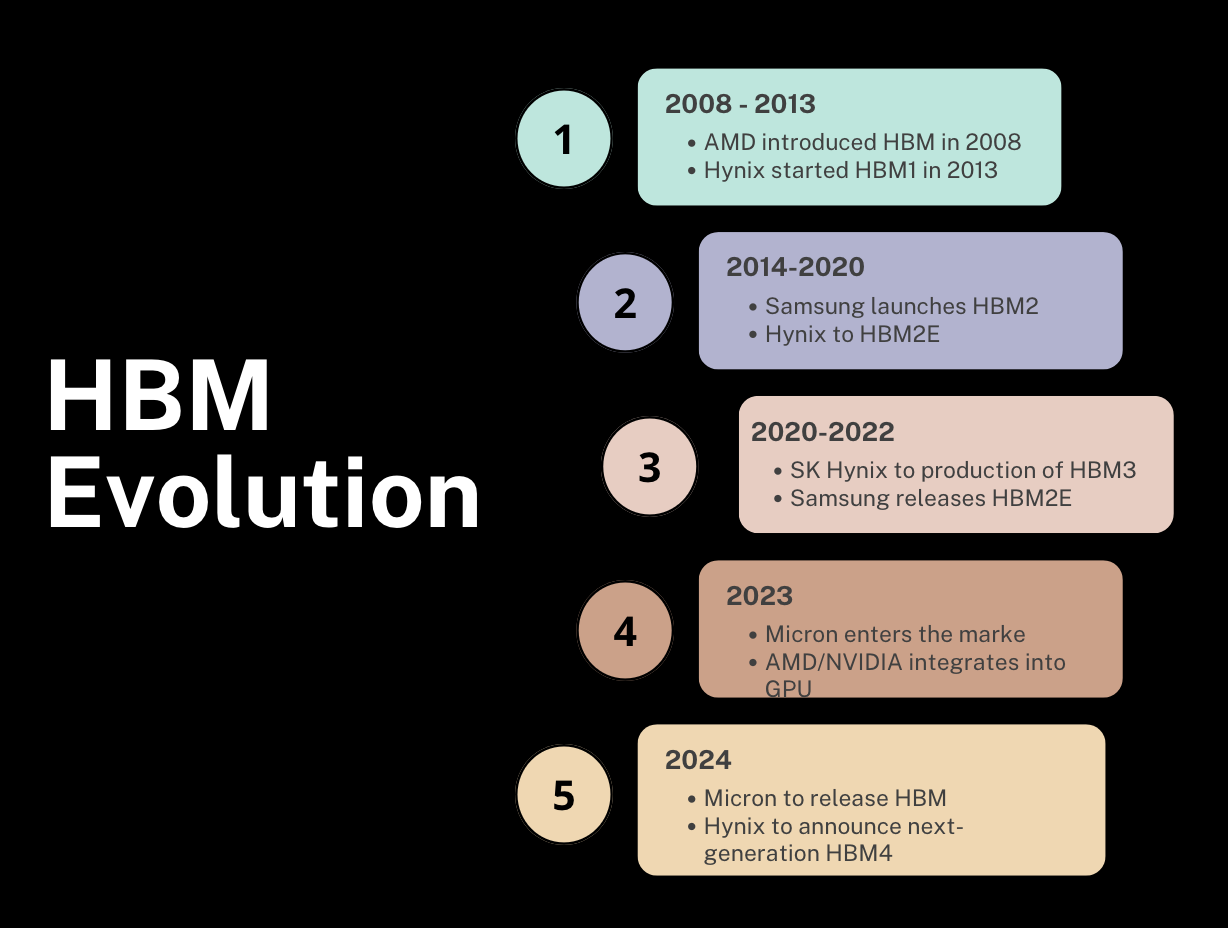

As we discussed in Part 2, High Bandwidth Memory (HBM) is gaining traction in AI computing, and NVIDIA and AMD have already integrated HBM into their AI chips. This rising interest has led many of our clients to ask about Micron and the potential benefits of investing in its HBM technology. Micron's entry into the HBM market signals a potential shift in market share: the company is positioned to capture 10-15% of the market in late 2024/2025.

In December 2023, Sanjay Mehrotra, CEO of Micron, stated, “We are in the final stages of validation to supply HBM3E for Nvidia’s next-generation AI accelerators.”

In this article, we will go through:

- HBM: Evolution and Comparison with GDDR and LPDDR Memory
- Three Players and Why Micron Is Positioned to Capture 10-15% of the HBM Market
- Supplier Roadmap 2024-2026 to NVIDIA

Please note: The insights presented in this article are derived from confidential consultations that our team has conducted with clients across private equity, hedge funds, startups, and investment banks, facilitated through specialized expert networks. Under our agreements with these networks, we cannot reveal specific names from these discussions. We therefore offer a summarized version of these insights that preserves their value while upholding our confidentiality commitments.